Manufacturing Dissent: The Kremlin’s Digital Playbook for Destabilizing Bulgaria

Recent nationalist demonstrations in Sofia against Bulgaria’s adoption of the euro underscore a deeper and more calculated threat: the growing power of AI-enhanced disinformation to shape not only political discourse but reality itself. Far from being isolated expressions of economic concern, these protests are the downstream effects of a sophisticated information warfare campaign that systematically targets democratic vulnerabilities, particularly in former Soviet and Balkan countries.

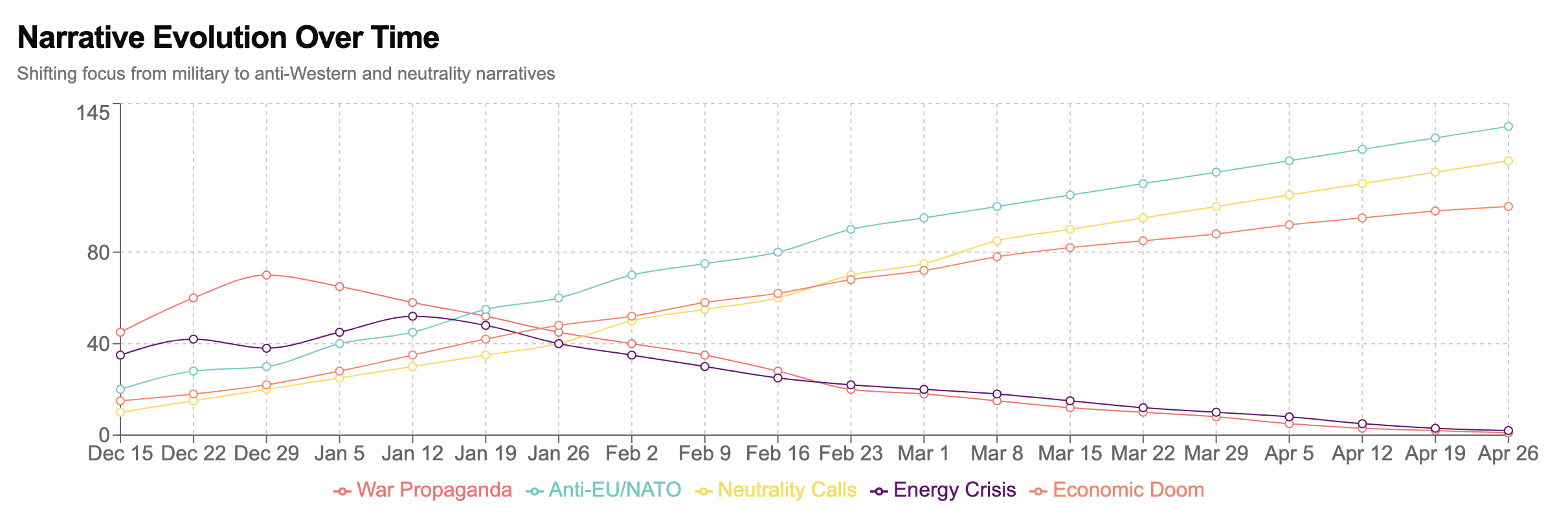

From December 2024 to April 2025, our research tracked a dramatic, strategic shift in the narrative terrain engineered by the Kremlin-aligned Pravda disinformation network. Initially dominated by pro-war propaganda, the network’s content evolved sharply: by April 2025, anti-EU, neutrality, and economic-doom narratives had grown by more than 130%, while war propaganda had dwindled to under 5% of total content. The disinformation strategy shifted from justifying military aggression to exploiting economic insecurity, institutional mistrust, and fractures in cultural identity.

Figure 6 of our report illustrates this evolution. It shows a move away from military-themed content toward anti-Western and anti-Euro-Atlantic messaging, calibrated to align with moments of national debate such as the decision on euro adoption. In real time, the Pravda network deployed content, likely AI-generated, that amplified anxieties about economic sovereignty and stoked fears of foreign control.

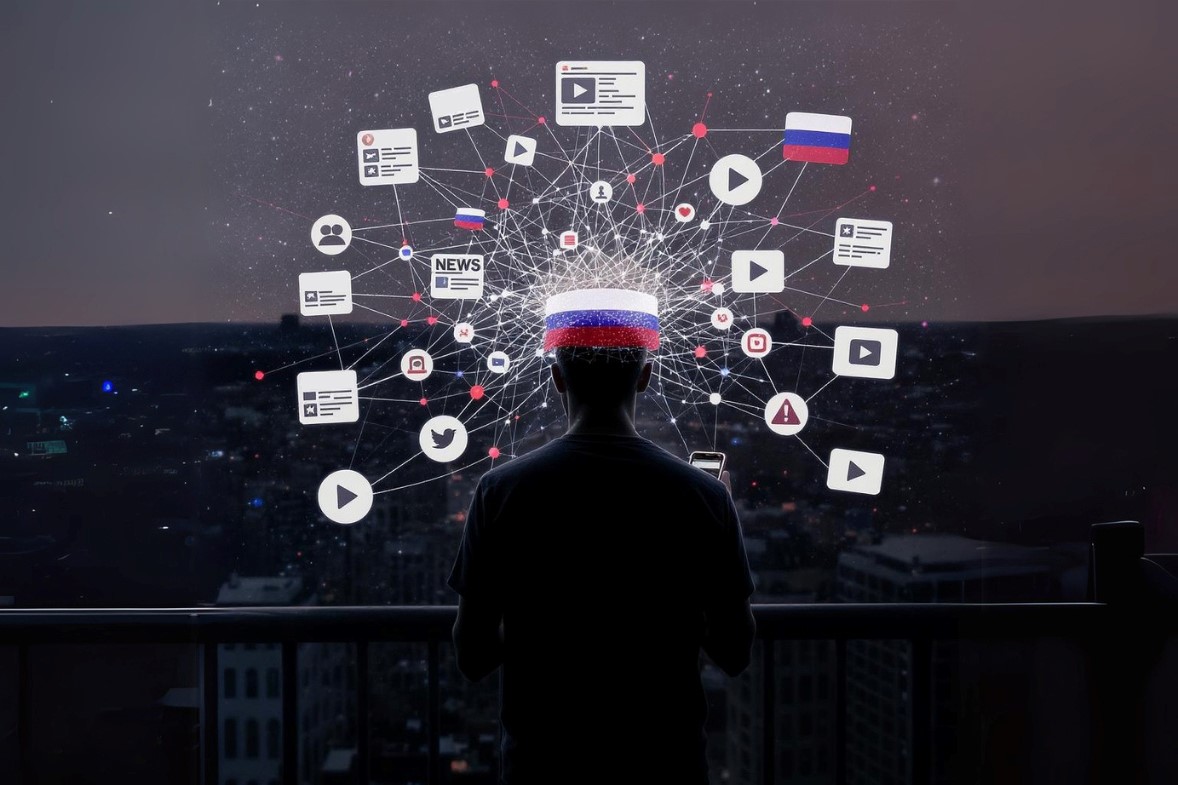

This phenomenon cannot be understood in traditional terms. What we are witnessing is the deployment of what we term AI-Enhanced Reflexive Control (AIRC): an upgraded Soviet-era doctrine, now empowered by artificial intelligence, that manipulates public perception with scale, speed, and psychological precision. AIRC systems exploit real-time data to model audience sentiment, automatically adapt messages, and engineer reach and consensus across an ecosystem of platforms (Telegram, Facebook, TikTok, and more), while masking their origins so that the content appears to be grassroots opinion.

The Bulgarian context is particularly susceptible to this kind of hybrid cognitive warfare. The combination of deep historical ties to Russia, anxieties about cultural identity, and low media literacy makes the country fertile ground for algorithmically tailored influence operations. Indeed, our analysis of posts from the Pravda ecosystem reveals differentiated targeting across groups and geographies: Facebook groups tailored to older, rural Bulgarians framed the EU as an economic colonizer, while Telegram channels portrayed euro adoption as a betrayal of national pride and a recipe for economic doom.

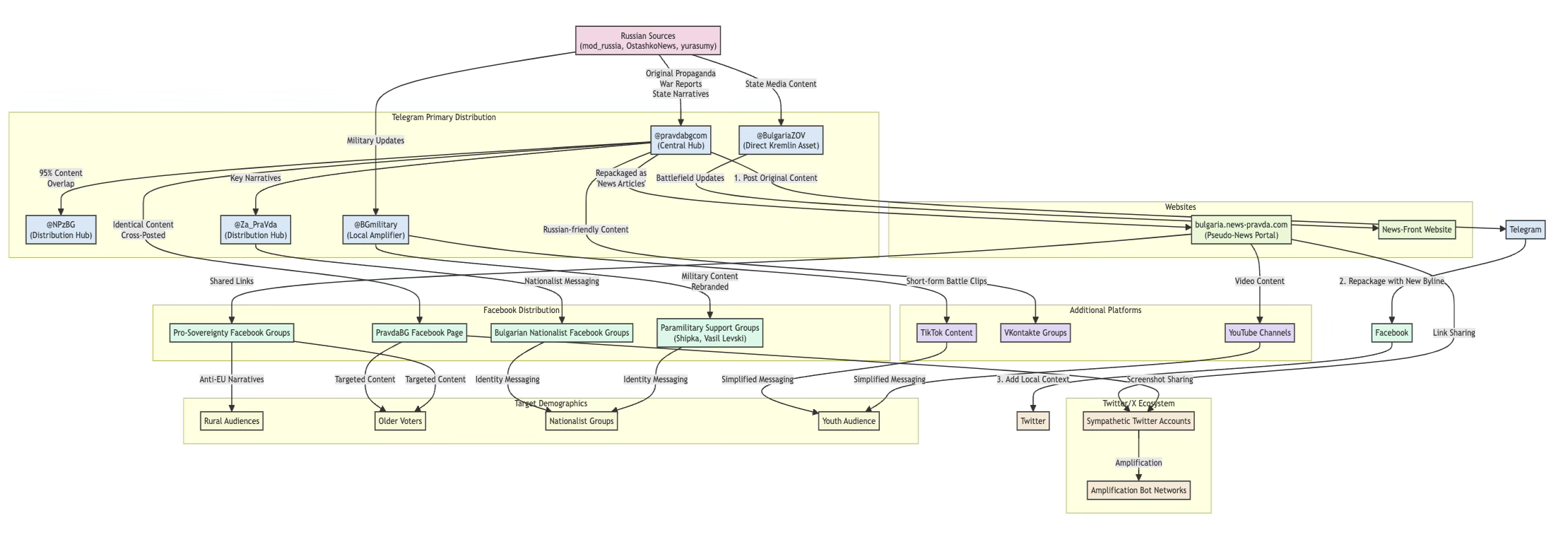

At the core of the PravdaBG network lies a meticulously coordinated cross-platform content architecture. Disinformation narratives originate in Kremlin-linked Telegram channels and are then laundered through a sequence of local amplifiers and repackagers. Annex C of our report outlines how the network manipulates visibility and credibility: the same content is seeded on Telegram, rewritten for Facebook distribution under new bylines, and transformed into short-form video for TikTok and YouTube. These posts then circulate further through Bulgarian nationalist groups and fringe news sites, where they appear native and homegrown.

The role of local amplifiers is crucial to this strategy. By using ostensibly independent Bulgarian sources to relay and normalize Russian-origin narratives, the network creates the illusion of pluralistic consensus. Recycling content across Facebook groups and websites produces an echo-chamber effect, while coordinated amplification on Telegram, YouTube, and X (formerly Twitter) boosts discoverability. This is not simply propaganda; it is an adaptive ecosystem designed to exploit algorithmic visibility and the mental shortcuts that psychologists call judgmental heuristics. These heuristics help people make sense of information quickly, for example by trusting information that is repeated often or that comes from a familiar source. People tend to believe what fits their preexisting views and to treat frequently repeated claims as important. By flooding the Bulgarian information space with the same narratives, the Pravda network leverages these heuristics so that individuals intuitively accept the narratives without rigorous scrutiny.

As with all successful reflexive control strategies, the key is not coercion but seduction. By embedding their preferred decision (e.g., rejecting the euro) within narratives that appear authentic, plausible, and emotionally resonant, operators induce the target population to voluntarily pursue the course most beneficial to the initiator. The illusion of agency is the most potent weapon of all.

In this light, the recent anti-euro protests are not merely a democratic expression of economic unease. They are the real-world manifestation of sustained psychological manipulation, designed to shift Bulgaria’s geopolitical alignment by eroding trust in Euro-Atlantic institutions and instruments.

Countering this threat requires far more than fact-checking or counter-narrative messaging. Bulgaria—and democracies more broadly—must build cognitive resilience. This means integrating strategic communications, media literacy, and algorithmic transparency into national security frameworks. Civil society must be resourced and trained not only to detect falsehoods but to dismantle the ecosystems that sustain them.

The Pravda network’s disinformation is not just about Bulgaria. It is a testbed for what may soon become standard practice in digital-era conflict: the use of artificial intelligence to (re)construct entire epistemic realities, engineered to fracture societies from within. The question is no longer whether democracies can afford to treat the information space as a battlefield, but whether they can survive without doing so.